Rotator

2019

Question: How do we celebrate the launch of our new brand in the physical world? How can people touch it, and play with it? Start with a circle.

Circular Logic

We explored a ring-based interface where users turn a giant wheel to control Buck brand expressions. From there we started putting together some parameters for a functional prototype.

We love physical interfaces, and this simple input was a great excuse to explore how physical design, graphic design, and code can collide on a small scale. It also raised a lot of interesting questions when we began thinking about creating content. Adding the ability to scrub forwards and backwards changes a lot about traditional frame-based animation. For instance, how do we deal with easing in an animation when the wheel has its own momentum? Will an animation still feel responsive when played back at a variable frame rate? What about code sketches? How do we ensure they all live in the same design space?

The build...

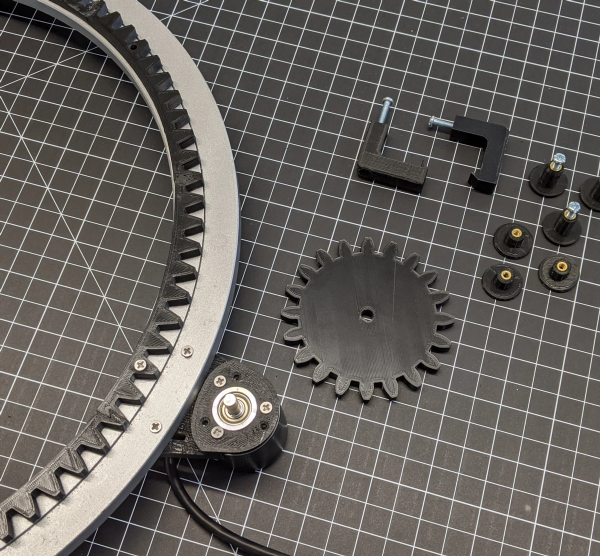

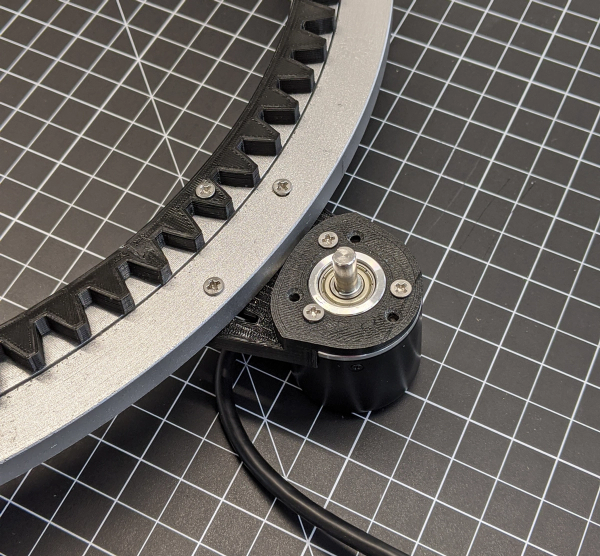

We decided early on that this ‘thing’ should feel like a prototype, so we chose the ultimate prototype material: corrugated cardboard. Because we needed to see a screen through the middle, we couldn’t rotate the wheel on a traditional axle. After a bit of searching, we found that a lazy Susan bearing was the perfect prototype solution. We 3D printed some connective plastic pieces and we were in business. We had a lot of ideas about what this wheel could do, but first we needed to bring it a little closer to reality.

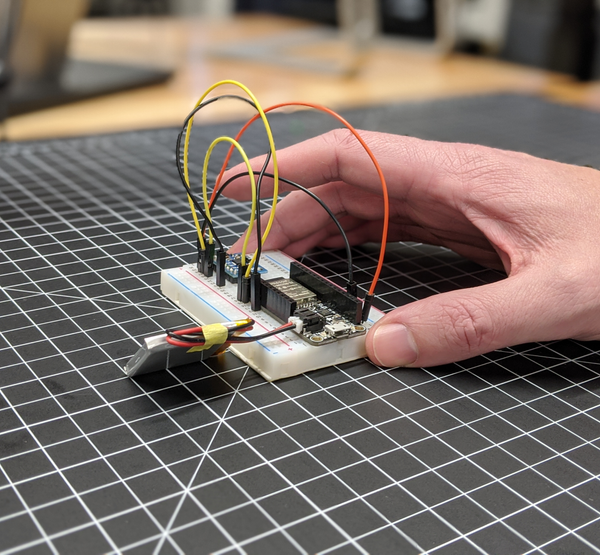

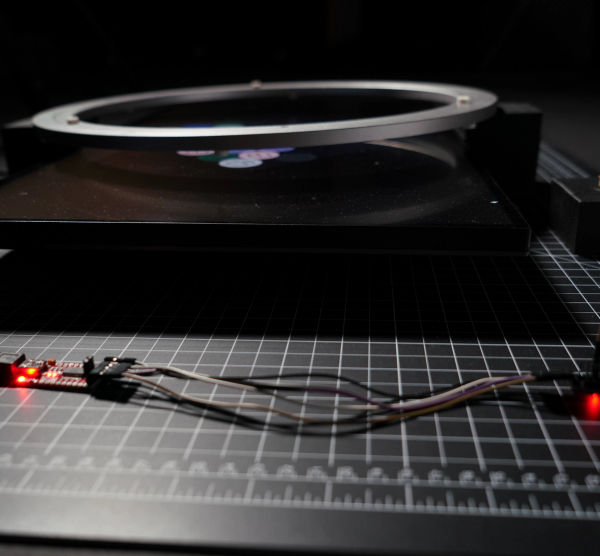

Measuring rotation was our next major challenge. We initially tested a wireless solution mounted directly inside the wheel.

This came in the form of an ESP32 microcontroller connected with a gyro that lived inside the wheel itself.

We wrote an app that talked to the ESP32 over Wi-Fi to get real-time orientation feedback. However, it wasn’t quite real-time enough, and in the end we scrapped it for a faster and more precise solution.

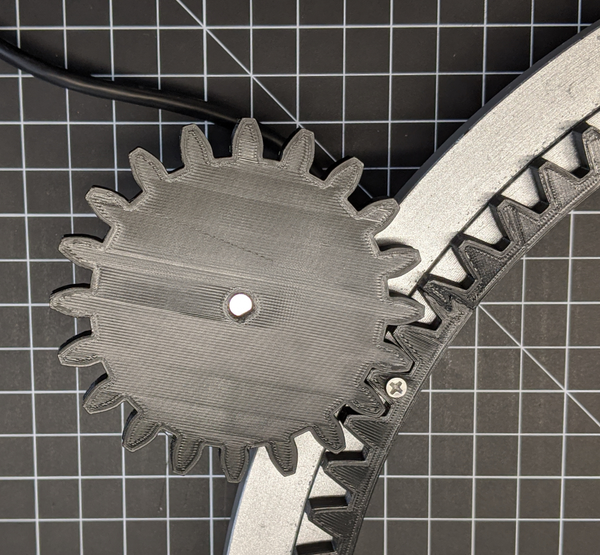

Enter the rotary encoder. We designed and 3D printed some meshing spur gears and connected the encoder to our wheel at a 4.5:1 gear ratio. Our encoder pulses 600 times per revolution, so with our gearing we get 2,700 pulses per turn of the wheel, which gives us a resolution of about 0.133 of a degree. Together with a wired connection, we finally got the accuracy and speed we wanted.
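As a rough check on that number, here is a minimal sketch of the pulse-to-angle arithmetic. It assumes one count per encoder pulse and that the encoder shaft turns 4.5 times for each turn of the wheel; the function and constant names are ours for illustration, not taken from the actual Rotator code.

```python
# Figures from the text above.
PULSES_PER_REV = 600   # encoder pulses per encoder-shaft revolution
GEAR_RATIO = 4.5       # assumed: encoder turns per one wheel turn

# Pulses seen for one full turn of the wheel, and the angle each pulse represents.
COUNTS_PER_WHEEL_REV = PULSES_PER_REV * GEAR_RATIO   # 2700.0
DEGREES_PER_COUNT = 360.0 / COUNTS_PER_WHEEL_REV     # about 0.133


def counts_to_degrees(counts: int) -> float:
    """Convert a signed running pulse count into a cumulative wheel angle."""
    return counts * DEGREES_PER_COUNT


def wrap_degrees(angle_deg: float) -> float:
    """Fold a cumulative angle into the range [0, 360)."""
    return angle_deg % 360.0
```

Keeping the cumulative angle and the wrapped angle separate is a design choice: a sketch that loops can use the wrapped value, while one that counts full turns can use the cumulative one.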

Code Sketches

Once we had a physical object to play with we turned our efforts towards design, animation & code. We started with three different code sketches to explore user interactions and see what felt right while using your hands to control animations and physics. These code sketches have been a great starting point to decide how we can design content for the screen & interface. From here we’ll be digging into each sketch even further to test how things like frame rate, scale, speed & inertia affect how users interact with Rotator. And by the way, it’s fun to throw things around.

The first sketch is an animation sequence playback engine that will allow us to plug any animation into a database and have Rotator control it in some way. That makes it super collaborative: our traditional artists can contribute content to this thing.
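One simple way a playback engine like this could scrub a frame sequence is to map the wheel angle directly to a frame index. The sketch below is only an illustration under that assumption; `frame_for_angle` and `degrees_per_loop` are hypothetical names, and the real engine may map rotation to playback quite differently.

```python
def frame_for_angle(angle_deg: float, frame_count: int,
                    degrees_per_loop: float = 360.0) -> int:
    """Pick the frame to show for a given wheel angle.

    degrees_per_loop is an assumed tuning knob: how far the wheel must
    turn to play the whole sequence once. Turning backwards scrubs backwards.
    """
    # Position within the current loop, as a fraction in [0, 1).
    phase = (angle_deg % degrees_per_loop) / degrees_per_loop
    # Guard against floating-point rounding pushing the index to frame_count.
    return min(int(phase * frame_count), frame_count - 1)


# Example: a 120-frame sequence mapped onto one full turn of the wheel.
half_turn_frame = frame_for_angle(180.0, 120)
backwards_quarter_frame = frame_for_angle(-90.0, 120)
```

Because the frame depends only on the angle and not on elapsed time, the animation follows the wheel at whatever speed it is turned.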

The second exploration is a sketch for moving in and out of Z-space based on rotation.

Last is a physics engine that uses the glyphs from our new brand guidelines.

What’s Next?

If you’ve made it this far, and want to see where this goes, watch this page as we’ll be updating our progress as often as possible.

As for us, we’ve proven the wheel, as an interaction device, is worth pursuing. It asks very little from a user. They can just jump right in and start playing with it. It gives a lot in terms of payoff, exploration, & satisfaction.

From a fabrication view, we need to talk to engineers about what it would take to make this at the scale we want — roughly 5x larger. Things we need to consider include how the screen is mounted (inside or behind it), what it would take to mount it to a wall, and how we can make it turn with the right amount of resistance.

From a development perspective we need to explore what a fleshed-out ecosystem would look like for managing multiple Rotators. How do we make it easy for designers & animators to test their content on the wheel without needing to code? What does a web-based backend look like? How do we let coders sketch and be creative as well? There are many future integrations in store for Rotator, so we should think about a future where the wheels talk to one another, or to other IoT devices, lights, and musical systems.

From a design standpoint we need to recommend guidelines on how to best create content with the wheel in mind. This includes frame rate considerations, as well as how to deal with rotating while playing back. If extra interactivity is needed, we need ways for designers to easily simulate and previz what their effect will look like without a physical wheel to work on.
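To make that last point concrete, here is one possible shape for a software stand-in for the wheel. This is a sketch under our own assumptions, not an existing tool: `VirtualWheel` is a hypothetical name, and the exponential friction model is a simplification that has not been measured against the real wheel.

```python
import math


class VirtualWheel:
    """Hypothetical simulated wheel: spin it, then let friction slow it down."""

    def __init__(self, friction: float = 1.5):
        self.angle_deg = 0.0       # cumulative rotation in degrees
        self.velocity_dps = 0.0    # angular velocity in degrees per second
        self.friction = friction   # assumed damping rate in 1/s, must be > 0

    def spin(self, velocity_dps: float) -> None:
        """Simulate a user flicking the wheel at the given speed."""
        self.velocity_dps = velocity_dps

    def step(self, dt: float) -> float:
        """Advance the simulation by dt seconds and return the new angle."""
        # Closed-form solution of dv/dt = -friction * v over the step, so the
        # result does not depend on how many frames the time is split into.
        decay = math.exp(-self.friction * dt)
        self.angle_deg += self.velocity_dps * (1.0 - decay) / self.friction
        self.velocity_dps *= decay
        return self.angle_deg


# Example: flick the wheel at one turn per second and let it coast for 10 s.
wheel = VirtualWheel(friction=2.0)
wheel.spin(360.0)
for _ in range(600):
    wheel.step(1.0 / 60.0)
```

A designer could feed `angle_deg` from a simulation like this into the same code that normally reads the encoder, which would let content be tested at a desk before it ever touches cardboard.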